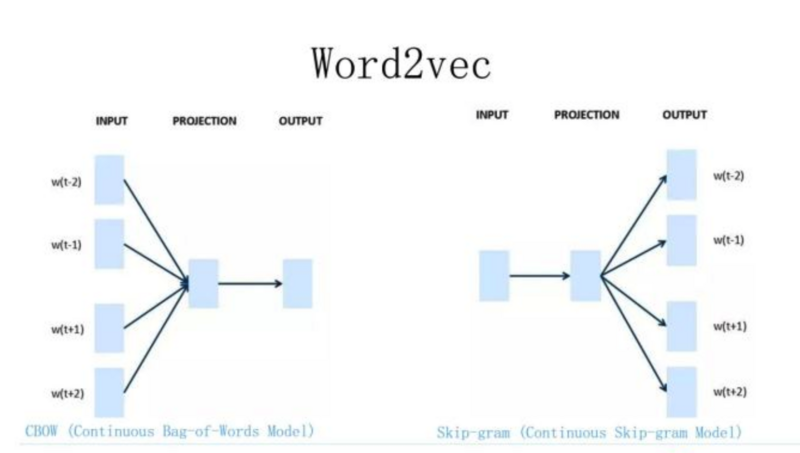

Word2Vec – CBOW and Skip-Gram

CBOW learns to predict a word from the context window around it (the n words before it and the n words after it), choosing the word with the highest probability of fitting that context. Because it learns to output the most likely word for a given context, which tends to be a frequent word, infrequent words are not represented as well with this technique. Skip-Gram is the inverse of CBOW: it learns to predict the surrounding context words from the center word.
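The difference between the two techniques shows up in how each one turns a sentence into training examples. Below is a minimal sketch, assuming whitespace-tokenized text and a symmetric window of `n` words on each side. The function names `cbow_pairs` and `skipgram_pairs` are hypothetical helpers for illustration, not part of any Word2Vec library. CBOW produces one (context → word) example per position. Skip-Gram flips this and produces one (word → context word) example for every neighbor.

```python
from typing import List, Tuple


def cbow_pairs(tokens: List[str], n: int) -> List[Tuple[List[str], str]]:
    """CBOW: the n words on each side of position i are the input; tokens[i] is the target."""
    pairs = []
    for i, target in enumerate(tokens):
        # Take up to n words before and n words after, clipped at the sentence edges.
        context = tokens[max(0, i - n):i] + tokens[i + 1:i + 1 + n]
        if context:
            pairs.append((context, target))
    return pairs


def skipgram_pairs(tokens: List[str], n: int) -> List[Tuple[str, str]]:
    """Skip-Gram: the center word is the input; each neighbor within n positions is a separate target."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - n), min(len(tokens), i + n + 1)):
            if j != i:  # skip the center word itself
                pairs.append((center, tokens[j]))
    return pairs


if __name__ == "__main__":
    sentence = "the quick brown fox jumps".split()
    for context, target in cbow_pairs(sentence, n=2):
        print(context, "->", target)
    for center, context_word in skipgram_pairs(sentence, n=1):
        print(center, "->", context_word)
```

In a real model these pairs feed a shallow network whose output layer scores every vocabulary word (typically with softmax or negative sampling). Skip-Gram emits up to 2n examples per occurrence of a word, while CBOW emits one. This is often cited as one reason Skip-Gram handles rare words better, though the exact effect depends on the corpus and settings.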

LinkedIn